An artificial intelligence model outperformed certified radiologists in diagnosing breast cancer from mammogram images, according to a new study. This suggests that the system could be used in clinical settings to facilitate earlier diagnoses, although clinical trials will be needed to confirm this.

The study, “International evaluation of an AI system for breast cancer screening,” was published in Nature.

Early diagnosis of breast cancer is critical because cancers are easier to treat early, when the tumor is small and before the cancer spreads. Screening helps because it can detect tumors before they cause obvious symptoms.

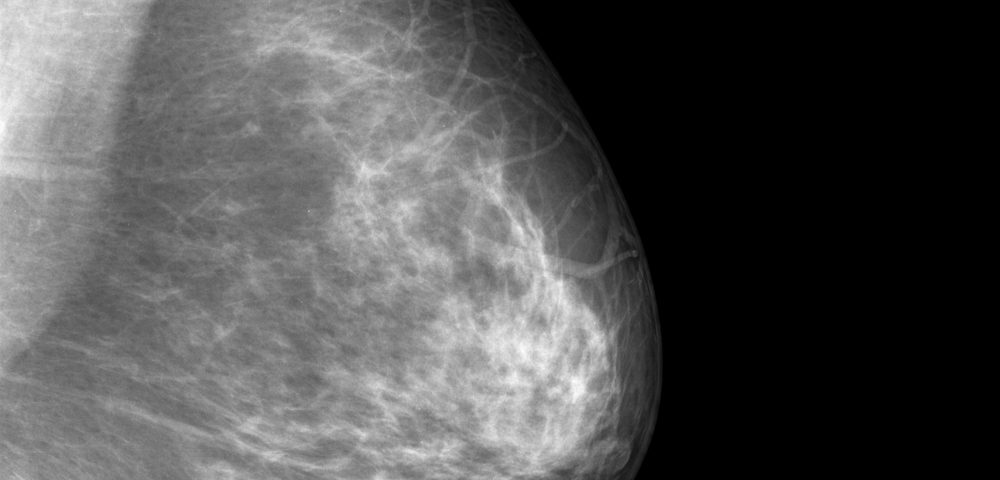

The standard of care for breast cancer screening is mammography, a breast imaging technique.

However, interpreting mammogram images can be tricky, and it's not uncommon for radiologists to misread them. Misreadings can produce false positives, which cause undue stress and anxiety, or false negatives, which can delay potentially life-saving treatment.

In the new study, researchers in the U.K. and U.S. tried to mitigate this problem by having an artificial intelligence (AI) system, rather than a human, interpret the mammograms.

In principle, this kind of AI involves feeding data to a computer: in this case, both mammograms and cancer diagnoses (based on subsequent biopsies and other tests). The computer then finds patterns it can use to predict whether a given mammogram came from a person with cancer. By learning from a large set of examples, the computer builds a model that can distinguish positive cases from negative ones.

In this study, the AI model was trained on data from two datasets, one from the U.S. and one from the U.K., which covered 3,097 and 25,856 women, respectively. All those included were female, though detailed demographics weren't assessed, a limitation that future studies will need to address.

In the U.K., mammograms are read by two radiologists before a diagnosis is given, and if they disagree, a third opinion is sought (to reduce human error). In the U.S., mammograms are usually read by just one radiologist.

Because these readings were recorded in the databases, the researchers could compare the AI's findings with those of the radiologists. Notably, the radiologists were presumed to have access to other potentially relevant clinical data, whereas the AI system was given only the mammogram images.

Despite that, the AI outperformed single-radiologist readings. Its sensitivity and specificity (the true-positive rate and the true-negative rate, respectively) were significantly higher: by 2.7 and 1.2 percentage points in the U.K. dataset, and by 9.4 and 5.7 percentage points in the U.S. dataset.
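For readers who want the arithmetic behind these two measures, here is a minimal sketch. Sensitivity is the share of actual cancers that get flagged, TP / (TP + FN), and specificity is the share of cancer-free cases that get cleared, TN / (TN + FP). The function name and the ten toy cases are made up for illustration and are not data from the study.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = cancer, 0 = no cancer)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # cancers correctly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # cancers missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # cancer-free cases correctly cleared
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical toy example: 4 cancers and 6 cancer-free cases.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
calls = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(truth, calls)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")  # sensitivity=0.75, specificity=0.83
```

The improvements reported in the study are differences between rates like these, one computed for the AI and one for the radiologists on the same cases.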

For the U.K. dataset, the AI's results were not significantly better than those of a second radiologist, but they weren't worse, either. This suggests that the AI system could be combined with radiologists' interpretations, reducing human workload without lowering the standard of care.

After simulating this scenario, the researchers concluded: “This combination of human and machine results in performance equivalent to that of the traditional double-reading process, but saves 88% of the effort of the second reader.”
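How could such a saving be measured? One plausible setup, and this is an assumption rather than the study's documented procedure, is that the AI stands in for the second reader and a human second reader is consulted only when the AI and the first reader disagree. Under that assumption, the effort saved is simply the share of cases where the two agree. The function name and the recall decisions below are hypothetical.

```python
def second_reader_workload(first_reader, ai):
    """Fraction of cases still sent to a human second reader, ASSUMING the AI
    acts as second reader and a human is consulted only on disagreements."""
    disagreements = sum(1 for r, a in zip(first_reader, ai) if r != a)
    return disagreements / len(first_reader)


# Hypothetical recall decisions (1 = recall for further tests, 0 = no recall).
first = [1, 0, 0, 1, 0, 0, 0, 0, 1, 0]
ai = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
workload = second_reader_workload(first, ai)
print(f"second-reader workload: {workload:.0%}, effort saved: {1 - workload:.0%}")
# second-reader workload: 10%, effort saved: 90%
```

The sketch only counts workload; whether accuracy stays equivalent to traditional double reading depends on how disagreements are resolved, which the simulation in the study had to account for separately.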

The AI also outperformed radiologists in a head-to-head comparison in which six certified radiologists read 500 sample cases and their results were compared with the AI's. Performance was measured using the area under the receiver operating characteristic (ROC) curve, where 1 is a perfect score and 0.5 is no better than chance; on average, the AI scored 0.115 higher than the radiologists.
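The area under the ROC curve has a convenient interpretation: it equals the probability that a randomly chosen cancer case receives a higher suspicion score than a randomly chosen cancer-free case. The minimal sketch below computes it directly from that definition by comparing every cancer/cancer-free pair. The function name and the six scores are made up for illustration.

```python
def roc_auc(y_true, scores):
    """AUC as the probability that a random cancer case scores higher than a
    random cancer-free case (ties count as half a win)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical suspicion scores for 3 cancers and 3 cancer-free cases.
truth = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.5, 0.2, 0.1]
print(round(roc_auc(truth, scores), 3))  # 0.889
```

Because AUC summarizes performance across every possible decision threshold, it lets a system that outputs continuous scores be compared with readers who give graded ratings.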

To evaluate whether the AI system could work in a different population, the researchers trained it using only the U.K. data and tested it on the U.S. data. Again, the AI system performed better than radiologists, improving the rate of correctly identified cancer cases by 8.1% and the rate of correctly identified non-cancer cases by 3.5%.

This suggests that the AI may be applicable across different populations, though again, the lack of demographic data limits the strength of this conclusion.

The study has other noteworthy limitations. For one, purely by chance, most of the mammograms were taken using similar equipment, so it's not clear whether the findings would have been as strong if there had been more variability (as there often is in actual clinics).

Similarly, the study included both tomosynthesis (also known as 3D mammography) and conventional digital (2D) mammography, but didn’t differentiate between the two in the analysis.

It's also worth noting that previous studies of computer-aided breast cancer detection showed good results in the lab but failed to improve detection in practice, so these results should be regarded as preliminary.

“Prospective clinical studies will be required to understand the full extent to which this technology can benefit patient care,” the researchers wrote.